LangGraph

Official documentation

Running nodes in sequence

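    // Import paths follow the v0.1 docs listed under Resources; newer releases may differ.
    import { ChatOpenAI } from "@langchain/openai";
    import { BaseMessage, HumanMessage } from "@langchain/core/messages";
    import { END, MessageGraph } from "@langchain/langgraph";

    // The code below runs inside an async request handler; `modelSecret`,
    // `modelOptions`, and `res` are assumed to come from that handler.
    const model = new ChatOpenAI({
      streaming: true,
      openAIApiKey: modelSecret,
      modelName: modelOptions.openaiModelType,
      temperature: modelOptions.temperature,
    });

    // A MessageGraph keeps a list of messages as its state.
    const graph = new MessageGraph();

    // Single node: pass the message history to the model and append its reply.
    graph.addNode("oracle", async (state: BaseMessage[]) => {
      return model.invoke(state);
    });

    // oracle -> END, with oracle as the entry point.
    graph.addEdge("oracle", END);
    graph.setEntryPoint("oracle");

    const runnable = graph.compile();
    const results = await runnable.invoke(new HumanMessage("What is 1 + 1?"));

    return res.status(200).json({
      results,
    });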

Response:

    {
      "results": [
        {
          "lc": 1,
          "type": "constructor",
          "id": ["langchain_core", "messages", "HumanMessage"],
          "kwargs": {
            "content": "What is 1 + 1?",
            "additional_kwargs": {},
            "response_metadata": {}
          }
        },
        {
          "lc": 1,
          "type": "constructor",
          "id": ["langchain_core", "messages", "AIMessageChunk"],
          "kwargs": {
            "content": "1 + 1 equals 2.",
            "additional_kwargs": {},
            "response_metadata": {
              "estimatedTokenUsage": {
                "promptTokens": 15,
                "completionTokens": 8,
                "totalTokens": 23
              },
              "prompt": 0,
              "completion": 0
            },
            "tool_call_chunks": [],
            "tool_calls": [],
            "invalid_tool_calls": []
          }
        }
      ]
    }

Using conditional edges

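    // Import paths follow the v0.1 docs listed under Resources; newer releases may differ.
    import { ChatOpenAI } from "@langchain/openai";
    import { BaseMessage, HumanMessage, ToolMessage } from "@langchain/core/messages";
    import { convertToOpenAITool } from "@langchain/core/utils/function_calling";
    import { Calculator } from "langchain/tools/calculator";
    import { END, MessageGraph } from "@langchain/langgraph";

    // The code below runs inside an async request handler; `modelSecret`,
    // `modelOptions`, and `res` are assumed to come from that handler.
    const model = new ChatOpenAI({
      streaming: true,
      openAIApiKey: modelSecret,
      modelName: modelOptions.openaiModelType,
      temperature: modelOptions.temperature,
    }).bind({
      // Expose the calculator as an OpenAI tool and let the model decide when to call it.
      tools: [convertToOpenAITool(new Calculator())],
      tool_choice: "auto",
    });

    const graph = new MessageGraph();

    graph.addNode("oracle", async (state: BaseMessage[]) => {
      return model.invoke(state);
    });

    // Runs the calculator for the tool call found on the last message.
    graph.addNode("calculator", async (state: BaseMessage[]) => {
      const tool = new Calculator();
      const toolCalls = state[state.length - 1].additional_kwargs.tool_calls ?? [];
      const calculatorCall = toolCalls.find(
        (toolCall) => toolCall.function.name === "calculator",
      );
      if (calculatorCall === undefined) {
        throw new Error("Calculator call not found");
      }
      const result = await tool.invoke(
        JSON.parse(calculatorCall.function.arguments),
      );
      return new ToolMessage({
        tool_call_id: calculatorCall.id,
        content: result,
      });
    });

    graph.addEdge("calculator", END);
    graph.setEntryPoint("oracle");

    // Returns the key of the branch to follow after "oracle".
    const router = (state: BaseMessage[]) => {
      const toolCalls = state[state.length - 1].additional_kwargs.tool_calls ?? [];
      if (toolCalls.length) {
        return "calculator";
      }
      return "end";
    };

    // Map the router's return values to target nodes.
    graph.addConditionalEdges("oracle", router, {
      calculator: "calculator",
      end: END,
    });

    const runnable = graph.compile();
    const results = await runnable.invoke(new HumanMessage("What is 1 + 1?"));

    return res.status(200).json({
      results,
    });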

Response for the input "What is 1 + 1?":

    {
      "results": [
        {
          "lc": 1,
          "type": "constructor",
          "id": ["langchain_core", "messages", "HumanMessage"],
          "kwargs": {
            "content": "What is 1 + 1?",
            "additional_kwargs": {},
            "response_metadata": {}
          }
        },
        {
          "lc": 1,
          "type": "constructor",
          "id": ["langchain_core", "messages", "AIMessageChunk"],
          "kwargs": {
            "content": "",
            "additional_kwargs": {
              "tool_calls": [
                {
                  "index": 0,
                  "id": "call_31gAUfN0KeI7O22p6Lt0avVq",
                  "type": "function",
                  "function": {
                    "name": "calculator",
                    "arguments": "{\"input\":\"1+1\"}"
                  }
                }
              ]
            },
            "response_metadata": {
              "estimatedTokenUsage": {
                "promptTokens": 15,
                "completionTokens": 0,
                "totalTokens": 15
              },
              "prompt": 0,
              "completion": 0
            },
            "tool_call_chunks": [],
            "tool_calls": [],
            "invalid_tool_calls": []
          }
        },
        {
          "lc": 1,
          "type": "constructor",
          "id": ["langchain_core", "messages", "ToolMessage"],
          "kwargs": {
            "tool_call_id": "call_31gAUfN0KeI7O22p6Lt0avVq",
            "content": "2",
            "additional_kwargs": {},
            "response_metadata": {}
          }
        }
      ]
    }

Response for the input "What is your name?":

    {
      "results": [
        {
          "lc": 1,
          "type": "constructor",
          "id": ["langchain_core", "messages", "HumanMessage"],
          "kwargs": {
            "content": "What is your name?",
            "additional_kwargs": {},
            "response_metadata": {}
          }
        },
        {
          "lc": 1,
          "type": "constructor",
          "id": ["langchain_core", "messages", "AIMessageChunk"],
          "kwargs": {
            "content": "My name is Assistant. How can I assist you today?",
            "additional_kwargs": {},
            "response_metadata": {
              "estimatedTokenUsage": {
                "promptTokens": 12,
                "completionTokens": 12,
                "totalTokens": 24
              },
              "prompt": 0,
              "completion": 0
            },
            "tool_call_chunks": [],
            "tool_calls": [],
            "invalid_tool_calls": []
          }
        }
      ]
    }
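The two responses differ only in which branch the router picked. As a rough illustration, the decision can be reproduced without LangChain at all. In the sketch below, `Msg`, `ToolCall`, `route`, and the two sample arrays are hypothetical stand-ins that keep only the fields the router reads; they are not LangChain types.

```typescript
// Hypothetical stand-ins for the parts of a message that the router inspects.
type ToolCall = { id: string; function: { name: string; arguments: string } };
type Msg = { content: string; additional_kwargs: { tool_calls?: ToolCall[] } };

// Returns the branch key: "calculator" when the last message requests a tool,
// "end" otherwise (including when there are no messages at all).
function route(state: Msg[]): "calculator" | "end" {
  const last = state[state.length - 1];
  const toolCalls = last?.additional_kwargs.tool_calls ?? [];
  return toolCalls.length > 0 ? "calculator" : "end";
}

// Shaped like the "What is 1 + 1?" run: the model replied with a tool call.
const withToolCall: Msg[] = [
  { content: "What is 1 + 1?", additional_kwargs: {} },
  {
    content: "",
    additional_kwargs: {
      tool_calls: [
        { id: "call_1", function: { name: "calculator", arguments: '{"input":"1+1"}' } },
      ],
    },
  },
];

// Shaped like the "What is your name?" run: a plain text reply.
const withoutToolCall: Msg[] = [
  { content: "What is your name?", additional_kwargs: {} },
  { content: "My name is Assistant.", additional_kwargs: {} },
];

console.log(route(withToolCall), route(withoutToolCall));
```

The returned string is only a key; the mapping passed to `addConditionalEdges` decides which node (or `END`) that key leads to.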

Building a cycle graph

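    // Import paths follow the v0.1 docs listed under Resources; newer releases may differ.
    import { ChatOpenAI } from "@langchain/openai";
    import { TavilySearchResults } from "@langchain/community/tools/tavily_search";
    import { AgentAction } from "@langchain/core/agents";
    import { BaseMessage, FunctionMessage, HumanMessage } from "@langchain/core/messages";
    import { ChatPromptTemplate, MessagesPlaceholder } from "@langchain/core/prompts";
    import { convertToOpenAIFunction } from "@langchain/core/utils/function_calling";
    import { END, StateGraph } from "@langchain/langgraph";
    import { ToolExecutor } from "@langchain/langgraph/prebuilt";

    // The code below runs inside an async request handler; `modelSecret`,
    // `modelOptions`, and `res` are assumed to come from that handler.
    const tools = [
      new TavilySearchResults({ maxResults: 1 }), // Requires TAVILY_API_KEY in the environment
      // new Calculator()
    ];
    const toolExecutor = new ToolExecutor({ tools });

    const model = new ChatOpenAI({
      streaming: true,
      openAIApiKey: modelSecret,
      modelName: modelOptions.openaiModelType,
      temperature: modelOptions.temperature,
    });

    // Describe the tools to the model as OpenAI functions.
    const toolsAsOpenAIFunctions = tools.map((tool) =>
      convertToOpenAIFunction(tool),
    );
    const model_with_tools = model.bind({
      functions: toolsAsOpenAIFunctions,
    });

    // Graph state: a single `messages` channel whose updates are appended.
    const agentState = {
      messages: {
        value: (x: BaseMessage[], y: BaseMessage[]) => x.concat(y),
        default: () => [],
      },
    };

    // Continue to the tool node only if the last message contains a function call.
    const shouldContinue = (state: { messages: Array<BaseMessage> }) => {
      const { messages } = state;
      const lastMessage = messages[messages.length - 1];
      if (
        !("function_call" in lastMessage.additional_kwargs) ||
        !lastMessage.additional_kwargs.function_call
      ) {
        return "end";
      }
      return "continue";
    };

    // Turn the function call on the last message into an AgentAction.
    const _getAction = (state: { messages: Array<BaseMessage> }): AgentAction => {
      const { messages } = state;
      const lastMessage = messages[messages.length - 1];
      if (!lastMessage) {
        throw new Error("No messages found");
      }
      if (!lastMessage.additional_kwargs.function_call) {
        throw new Error("No function call found");
      }
      return {
        tool: lastMessage.additional_kwargs.function_call.name,
        // `arguments` is a JSON string; parse it before handing it to the tool.
        toolInput: JSON.parse(lastMessage.additional_kwargs.function_call.arguments),
        log: "",
      };
    };

    // "agent" node: call the model with the full message history.
    const callModel = async (state: { messages: Array<BaseMessage> }) => {
      const { messages } = state;
      const prompt = ChatPromptTemplate.fromMessages([
        ["system", "You are a helpful assistant."],
        new MessagesPlaceholder("messages"),
      ]);
      const response = await prompt.pipe(model_with_tools).invoke({ messages });
      return {
        messages: [response],
      };
    };

    // "action" node: run the requested tool and report its result as a FunctionMessage.
    const callTool = async (state: { messages: Array<BaseMessage> }) => {
      const action = _getAction(state);
      const response = await toolExecutor.invoke(action);
      const functionMessage = new FunctionMessage({
        content: response,
        name: action.tool,
      });
      return {
        messages: [functionMessage],
      };
    };

    const workflow = new StateGraph({
      channels: agentState,
    });

    workflow.addNode("agent", callModel);
    workflow.addNode("action", callTool);
    workflow.setEntryPoint("agent");

    // agent -> action when a tool is requested, otherwise agent -> END.
    workflow.addConditionalEdges("agent", shouldContinue, {
      continue: "action",
      end: END,
    });

    // action -> agent closes the cycle.
    workflow.addEdge("action", "agent");

    const app = workflow.compile();

    const inputs = {
      messages: [new HumanMessage("what is the weather in paris")],
    };
    const results = await app.invoke(inputs);

    return res.status(200).json({
      results,
    });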

Response:

    {
      "results": {
        "messages": [
          {
            "lc": 1,
            "type": "constructor",
            "id": ["langchain_core", "messages", "HumanMessage"],
            "kwargs": {
              "content": "what is the weather in paris",
              "additional_kwargs": {},
              "response_metadata": {}
            }
          },
          {
            "lc": 1,
            "type": "constructor",
            "id": ["langchain_core", "messages", "AIMessageChunk"],
            "kwargs": {
              "content": "",
              "additional_kwargs": {
                "function_call": {
                  "name": "tavily_search_results_json",
                  "arguments": "{\"input\":\"current weather in Paris\"}"
                }
              },
              "response_metadata": {
                "estimatedTokenUsage": {
                  "promptTokens": 86,
                  "completionTokens": 21,
                  "totalTokens": 107
                },
                "prompt": 0,
                "completion": 0
              },
              "tool_call_chunks": [],
              "tool_calls": [],
              "invalid_tool_calls": []
            }
          },
          {
            "lc": 1,
            "type": "constructor",
            "id": ["langchain_core", "messages", "FunctionMessage"],
            "kwargs": {
              "content": "[{\"title\":\"Weather in Paris\",\"url\":\"https://www.weatherapi.com/\",\"content\":\"{'location': {'name': 'Paris', 'region': 'Ile-de-France', 'country': 'France', 'lat': 48.87, 'lon': 2.33, 'tz_id': 'Europe/Paris', 'localtime_epoch': 1717085019, 'localtime': '2024-05-30 18:03'}, 'current': {'last_updated_epoch': 1717084800, 'last_updated': '2024-05-30 18:00', 'temp_c': 15.0, 'temp_f': 59.0, 'is_day': 1, 'condition': {'text': 'Light rain', 'icon': '//cdn.weatherapi.com/weather/64x64/day/296.png', 'code': 1183}, 'wind_mph': 10.5, 'wind_kph': 16.9, 'wind_degree': 300, 'wind_dir': 'WNW', 'pressure_mb': 1010.0, 'pressure_in': 29.83, 'precip_mm': 0.27, 'precip_in': 0.01, 'humidity': 72, 'cloud': 75, 'feelslike_c': 14.0, 'feelslike_f': 57.1, 'windchill_c': 12.7, 'windchill_f': 54.8, 'heatindex_c': 14.0, 'heatindex_f': 57.1, 'dewpoint_c': 11.6, 'dewpoint_f': 52.9, 'vis_km': 10.0, 'vis_miles': 6.0, 'uv': 3.0, 'gust_mph': 17.4, 'gust_kph': 28.1}}\",\"score\":0.98324,\"raw_content\":null}]",
              "name": "tavily_search_results_json",
              "additional_kwargs": {},
              "response_metadata": {}
            }
          },
          {
            "lc": 1,
            "type": "constructor",
            "id": ["langchain_core", "messages", "AIMessageChunk"],
            "kwargs": {
              "content": "The current weather in Paris is as follows:\n- Temperature: 15.0°C (59.0°F)\n- Condition: Light rain\n- Wind: 16.9 km/h from WNW\n- Pressure: 1010.0 mb\n- Humidity: 72%\n- Cloudiness: 75%\n- Visibility: 10.0 km\n- UV Index: 3.0\n\nFor more details, you can visit [Weather in Paris](https://www.weatherapi.com/).",
              "additional_kwargs": {},
              "response_metadata": {
                "estimatedTokenUsage": {
                  "promptTokens": 537,
                  "completionTokens": 104,
                  "totalTokens": 641
                },
                "prompt": 0,
                "completion": 0
              },
              "tool_call_chunks": [],
              "tool_calls": [],
              "invalid_tool_calls": []
            }
          }
        ]
      }
    }

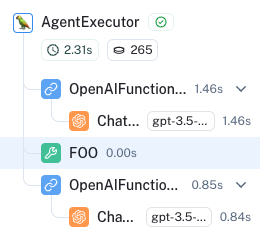

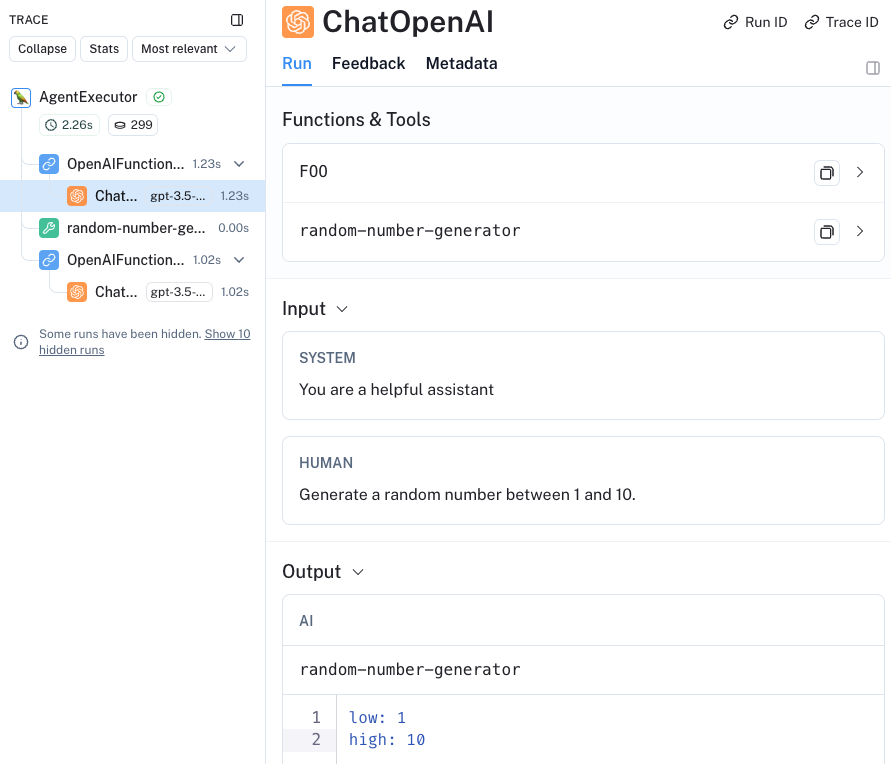
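The message order in the response (human, AI function call, function result, AI answer) follows from two things: each node returns only its new messages, and the `messages` channel appends them via `x.concat(y)`. The sketch below imitates that loop with plain TypeScript and stubbed nodes. It is not how LangGraph is implemented; `SketchMsg`, `callModelStub`, `callToolStub`, `runCycle`, and the `maxSteps` guard are all hypothetical names made up for illustration.

```typescript
type SketchMsg = {
  role: "human" | "ai" | "function";
  content: string;
  functionCall?: { name: string; arguments: string };
};
type SketchState = { messages: SketchMsg[] };

// Same shape as the `value` reducer of the `messages` channel: append, never overwrite.
const reduceMessages = (x: SketchMsg[], y: SketchMsg[]): SketchMsg[] => x.concat(y);

// Stub "agent" node: requests a tool first, then answers once a function result exists.
const callModelStub = (state: SketchState): SketchState => {
  const hasResult = state.messages.some((m) => m.role === "function");
  return hasResult
    ? { messages: [{ role: "ai", content: "Light rain, 15 degrees C." }] }
    : {
        messages: [
          {
            role: "ai",
            content: "",
            functionCall: { name: "search", arguments: '{"input":"weather in Paris"}' },
          },
        ],
      };
};

// Stub "action" node: returns a canned result for the requested function.
const callToolStub = (state: SketchState): SketchState => {
  const last = state.messages[state.messages.length - 1];
  return { messages: [{ role: "function", content: `result of ${last.functionCall?.name}` }] };
};

// Same decision as shouldContinue: loop only while the last message asks for a function.
const shouldContinueStub = (state: SketchState): "continue" | "end" =>
  state.messages[state.messages.length - 1].functionCall ? "continue" : "end";

// agent -> (action -> agent)* -> end, merging every node update through the reducer.
function runCycle(input: SketchMsg[], maxSteps = 10): SketchState {
  let state: SketchState = { messages: reduceMessages([], input) };
  for (let step = 0; step < maxSteps; step++) {
    state = { messages: reduceMessages(state.messages, callModelStub(state).messages) };
    if (shouldContinueStub(state) === "end") break;
    state = { messages: reduceMessages(state.messages, callToolStub(state).messages) };
  }
  return state;
}

const finalState = runCycle([{ role: "human", content: "what is the weather in paris" }]);
console.log(finalState.messages.map((m) => m.role).join(" -> "));
// prints "human -> ai -> function -> ai"
```

The `maxSteps` guard exists only so the stub loop cannot run forever if a node keeps requesting tools.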

Custom tools

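    // Import paths follow the v0.1 docs listed under Resources; newer releases may differ.
    import { z } from "zod";
    import { ChatOpenAI } from "@langchain/openai";
    import type { ChatPromptTemplate } from "@langchain/core/prompts";
    import { DynamicStructuredTool, DynamicTool } from "@langchain/core/tools";
    import { AgentExecutor, createOpenAIFunctionsAgent } from "langchain/agents";
    import { pull } from "langchain/hub";

    // The code below runs inside an async request handler; `modelSecret`,
    // `modelOptions`, and `res` are assumed to come from that handler.
    const model = new ChatOpenAI({
      streaming: true,
      openAIApiKey: modelSecret,
      modelName: modelOptions.openaiModelType,
      temperature: modelOptions.temperature,
    });

    const tools = [
      // DynamicTool: takes a single string input.
      new DynamicTool({
        name: "FOO",
        description:
          "call this to get the value of foo. input should be an empty string.",
        func: async () => "baz",
      }),
      // DynamicStructuredTool: takes an object validated against a zod schema.
      new DynamicStructuredTool({
        name: "random-number-generator",
        description: "generates a random number between two input numbers",
        schema: z.object({
          low: z.number().describe("The lower bound of the generated number"),
          high: z.number().describe("The upper bound of the generated number"),
        }),
        func: async ({ low, high }) => {
          return (Math.random() * (high - low) + low).toString();
        },
      }),
    ];

    // Pull the agent prompt from the LangChain Hub.
    const prompt = await pull<ChatPromptTemplate>(
      "hwchase17/openai-functions-agent",
    );

    const agent = await createOpenAIFunctionsAgent({
      llm: model,
      tools,
      prompt,
    });

    const agentExecutor = new AgentExecutor({
      agent,
      tools,
      verbose: true,
    });

    const results = await agentExecutor.invoke({
      input: "What is the value of foo?",
    });

    return res.status(200).json({
      results,
    });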

Response:

    {
      "results": {
        "input": "What is the value of foo?",
        "output": "The value of foo is \"baz\"."
      }
    }

Response for a second input:

    {
      "results": {
        "input": "Generate a random number between 1 and 10.",
        "output": "I have generated a random number between 1 and 10 for you. The number is 4.31."
      }
    }
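The second response returns a fractional number because the tool's `func` scales `Math.random()` into the range without rounding. The sketch below pulls that arithmetic into a standalone function so it can be checked in isolation. `randomBetween`, its injectable `rng` parameter, and the bounds check are hypothetical additions for illustration; the tool in these notes uses `Math.random()` directly and relies on the zod schema only for type validation.

```typescript
// Maps a value from rng() in [0, 1) onto [low, high) and returns it as a string,
// since a tool's func is expected to return text for the model to read.
function randomBetween(
  low: number,
  high: number,
  rng: () => number = Math.random, // injectable so the result can be made deterministic
): string {
  if (!Number.isFinite(low) || !Number.isFinite(high)) {
    throw new TypeError("low and high must be finite numbers");
  }
  if (low > high) {
    throw new RangeError("low must not be greater than high");
  }
  return (rng() * (high - low) + low).toString();
}

// With a fixed rng the mapping is easy to verify by hand.
console.log(randomBetween(1, 10, () => 0)); // prints "1"
console.log(randomBetween(1, 10, () => 0.5)); // prints "5.5"
```

If whole numbers are wanted, rounding could be applied inside the function before converting to a string.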

Two core questions ❤️

- What does each agent do?

- How are the agents connected to each other?

Resources

- 🦜🕸️LangGraph.js: https://js.langchain.com/v0.1/docs/langgraph/

- How to build your custom tool?: https://js.langchain.com/v0.1/docs/modules/agents/tools/dynamic/

- LangChain Blog: LangGraph: https://blog.langchain.dev/langgraph/

- LangGraph: Multi-Agent Workflows: https://blog.langchain.dev/langgraph-multi-agent-workflows/

- LangGraph: Multi-Agent Workflows (YouTube): https://www.youtube.com/watch?v=hvAPnpSfSGo

- Hierarchical Agent Teams: https://github.com/langchain-ai/langgraphjs/blob/main/examples/multi_agent/hierarchical_agent_teams.ipynb