Kubernetes in Theory and Practice, Part 4: Resource Management

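Question: Why do we need resource management?

If we do not tell Kubernetes how much CPU and memory a container needs, it has no choice but to treat all containers equally. That often leads to a very uneven distribution of resource usage. Asking Kubernetes to schedule containers without resource specifications is like boarding a ship without a captain.

Practice: Defining Pods with resource limits and requests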

go-demo-2-random.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: 200Mi
            cpu: 0.5
          requests:
            memory: 100Mi
            cpu: 0.3
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
        resources:
          limits:
            memory: 100Mi
            cpu: 200m
          requests:
            memory: 50Mi
            cpu: 100m
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

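Definition: Resource units

CPU resources

CPU is measured in CPU units. One CPU is written as 1, which is equivalent to 1000m (millicpu), and it can be split into fractions such as 0.5 (500m), 0.2 (200m), or 0.1 (100m).

Memory units

Memory is measured in bytes (1 byte = 8 bits). A quantity can carry a decimal suffix or a power-of-two suffix:

- Decimal: k (kilobyte), M (megabyte), G (gigabyte), T (terabyte), P (petabyte), E (exabyte)

- Power of two: Ki (kibibyte), Mi (mebibyte), Gi (gibibyte), Ti (tebibyte), Pi (pebibyte), Ei (exbibyte)

Going back to the go-demo-2-random.yml definition, the database container has its memory limit set to 200Mi (200 mebibytes, roughly 210 megabytes) and its request set to 100Mi (100 mebibytes).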
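To make the unit notation concrete, here is a minimal Python sketch that converts CPU quantities to millicores and memory quantities to bytes. The function names `cpu_to_millicores` and `memory_to_bytes` are made up for this illustration and are not part of any Kubernetes client library; the sketch only handles the plain suffixes listed above.

```python
from decimal import Decimal

# Multipliers for the memory suffixes; two-letter suffixes are powers of two.
_MEMORY_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18,
}


def cpu_to_millicores(quantity) -> int:
    """Convert a CPU quantity such as 0.5 or "200m" to millicores."""
    text = str(quantity).strip()
    if text.endswith("m"):
        return int(text[:-1])
    return int(Decimal(text) * 1000)


def memory_to_bytes(quantity) -> int:
    """Convert a memory quantity such as "200Mi" or "100M" to bytes."""
    text = str(quantity).strip()
    # Try two-letter suffixes first so that "Mi" is not mistaken for "M".
    for suffix in sorted(_MEMORY_SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return int(Decimal(text[: -len(suffix)]) * _MEMORY_SUFFIXES[suffix])
    return int(Decimal(text))  # no suffix: the value is already in bytes


print(cpu_to_millicores(0.5), cpu_to_millicores("200m"))
print(memory_to_bytes("200Mi"), memory_to_bytes("100M"))
```

Using `Decimal` instead of `float` avoids rounding surprises with values such as 0.3.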

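Definition: Kubernetes limits and requests

- Limits: the upper bound of resources a container may use. A container that tries to use more CPU than its limit is throttled; a container that exceeds its memory limit is terminated (OOMKilled) and is then restarted or not, depending on the Pod's restart policy.

- Requests: the amount of resources a container is expected to need. Kubernetes uses requests to decide where to place a Pod, by comparing them with the resources on each node that are not yet reserved by the requests of other Pods.

- If one node cannot satisfy the requests, the Pod may be scheduled on another node with more free resources;

- If no node has enough free resources, the Pod stays in the Pending state.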
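The placement rule described in the list above can be sketched in a few lines of Python. This is a deliberately simplified first-fit model, not the real scheduler (which also filters and scores nodes on many other criteria); the `Node` class, the `place` function, and the node name are hypothetical, and 2GB of RAM is approximated as 2048Mi.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    allocatable_cpu_m: int      # millicores the node can hand out
    allocatable_mem_mi: int     # Mi the node can hand out
    requested_cpu_m: int = 0    # sum of requests of Pods already placed
    requested_mem_mi: int = 0

    def fits(self, cpu_m: int, mem_mi: int) -> bool:
        """A Pod fits if its requests do not push the sums past allocatable."""
        return (self.requested_cpu_m + cpu_m <= self.allocatable_cpu_m
                and self.requested_mem_mi + mem_mi <= self.allocatable_mem_mi)


def place(nodes, cpu_m: int, mem_mi: int) -> str:
    """Return the name of the first node that fits, or "Pending" if none does."""
    for node in nodes:
        if node.fits(cpu_m, mem_mi):
            node.requested_cpu_m += cpu_m
            node.requested_mem_mi += mem_mi
            return node.name
    return "Pending"


nodes = [Node("node-1", allocatable_cpu_m=1000, allocatable_mem_mi=2048)]
print(place(nodes, cpu_m=300, mem_mi=100))        # DB requests from go-demo-2-random.yml
print(place(nodes, cpu_m=300, mem_mi=12 * 1024))  # a 12Gi request does not fit anywhere
```

Note that the check uses the sum of requests, not the measured usage of the node.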

Practice: Creating the resources and inspecting limits and requests

kubectl create \
    -f go-demo-2-random.yml \
    --record --save-config

kubectl rollout status \
    deployment go-demo-2-api

kubectl describe deploy go-demo-2-api

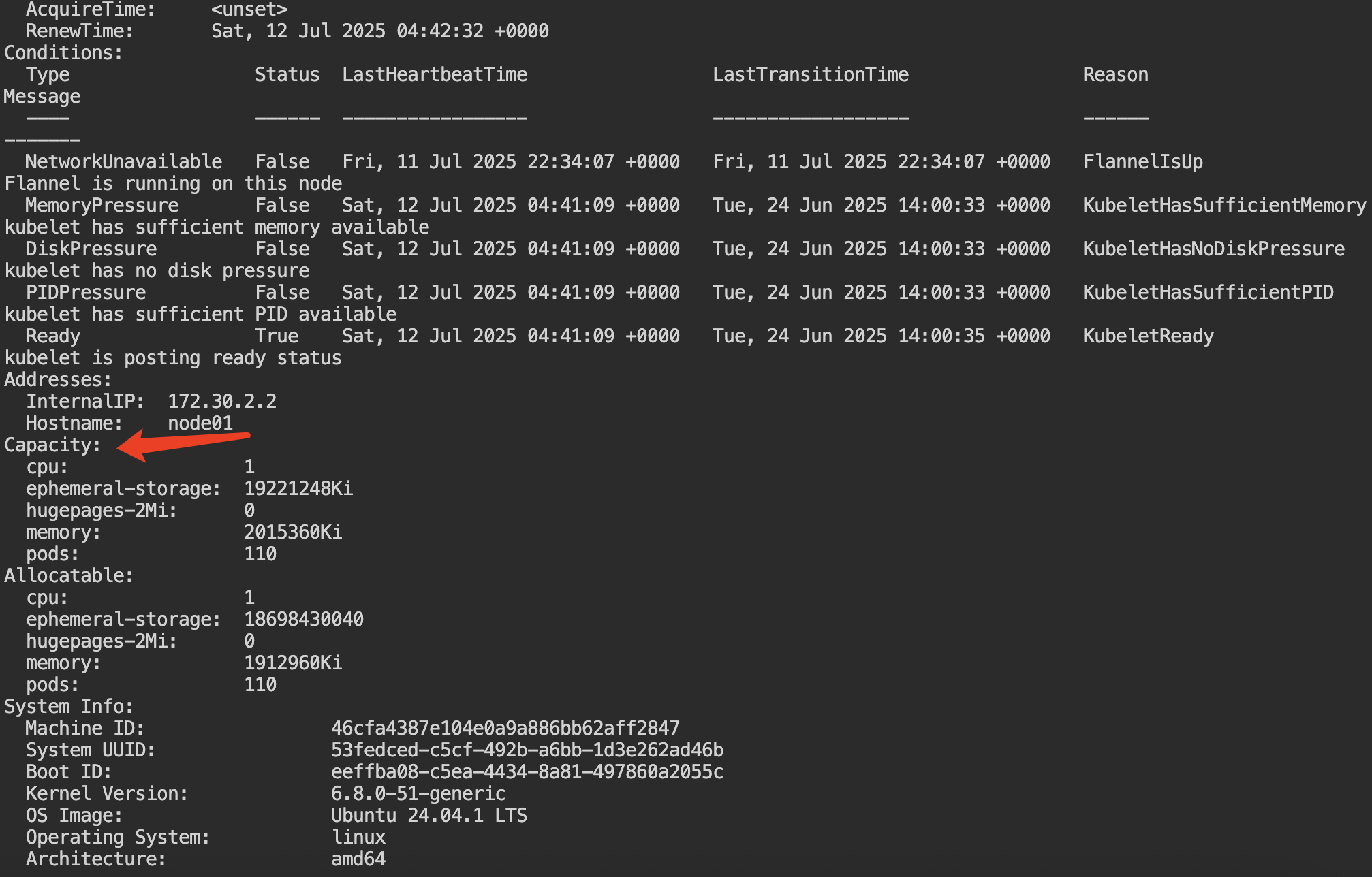

Inspect the nodes

kubectl describe nodes

- Capacity: 1 CPU, 2GB RAM, and at most 110 Pods.

- Non-terminated Pods: every running Pod together with its CPU and memory requests and limits.

- Allocated resources: the sums of the requests and limits of all those Pods.

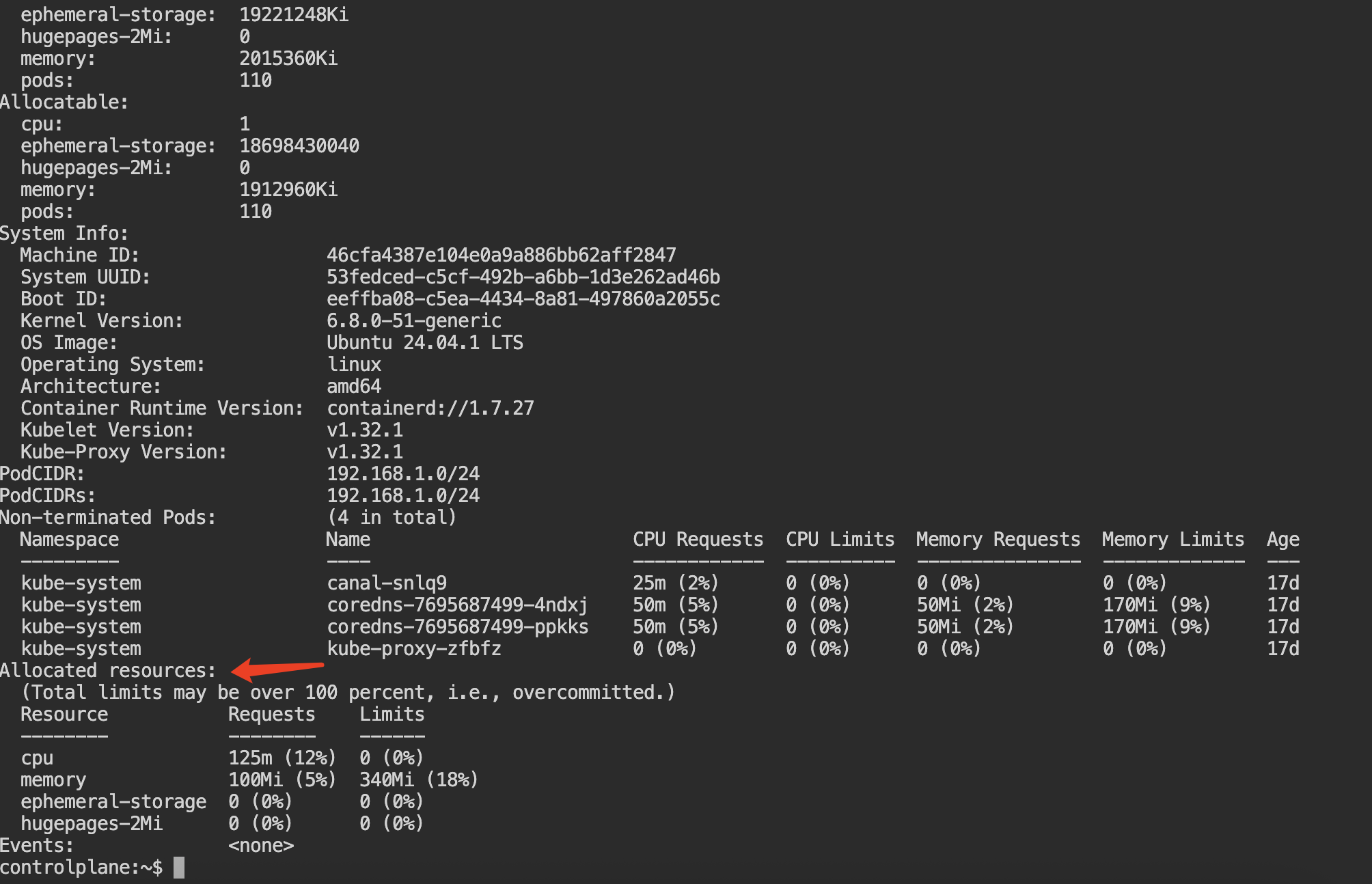

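Practice: Measuring actual memory and CPU consumption with Metrics Server

Metrics Server collects and interprets various signals such as compute resource usage and lifecycle events. In our case, we are only interested in the CPU and memory consumption of the containers running in the cluster.

Recommended monitoring setup: use Prometheus, with the Kubernetes API as its source of metrics, and pair it with Alertmanager for alerting.

kubectl --namespace kube-system \
    get pods

metrics-server is running.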

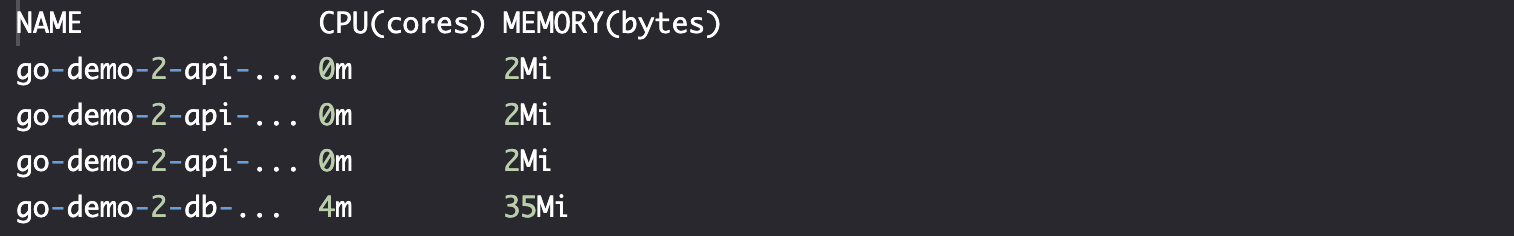

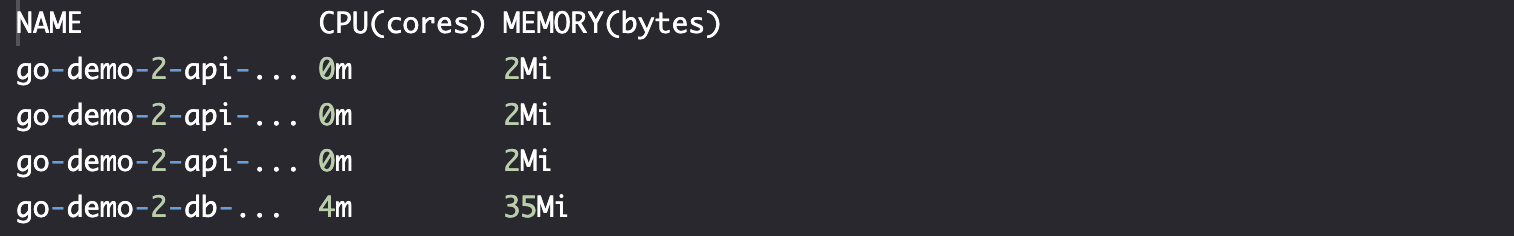

kubectl top pods

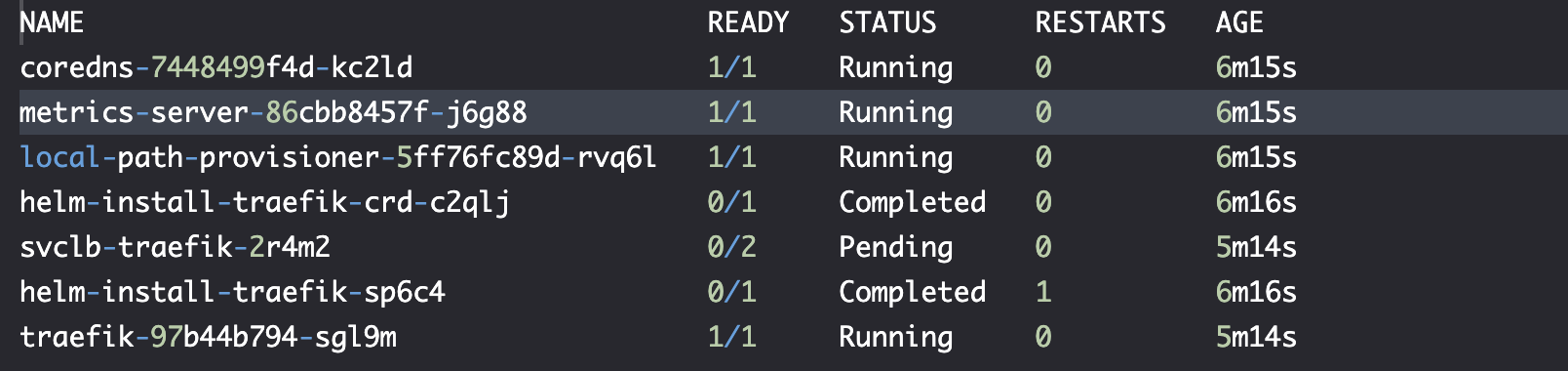

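The DB uses about 35Mi of memory, yet we requested 100Mi, which means we overestimated its memory needs. The same goes for CPU: actual consumption is about 0.005 CPU (5m), while we requested 0.3 CPU (300m).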
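The comparison between measured usage and requests can be automated. The sketch below parses text shaped like the output of `kubectl top pods` and computes how much of the request is actually used. The pod name in `SAMPLE` is hypothetical, the numbers are the ones quoted above, and the parser assumes CPU is reported in `m` and memory in `Mi`.

```python
# Hypothetical sample in the column layout printed by `kubectl top pods`.
SAMPLE = """\
NAME                 CPU(cores)   MEMORY(bytes)
go-demo-2-db-xxxxx   5m           35Mi
"""


def parse_top(output: str) -> dict:
    """Map pod name -> (CPU in millicores, memory in Mi)."""
    usage = {}
    for line in output.strip().splitlines()[1:]:  # skip the header row
        name, cpu, memory = line.split()
        # Assumes the "m" and "Mi" suffixes; other units are not handled.
        usage[name] = (int(cpu[:-1]), int(memory[:-2]))
    return usage


def utilization(used: int, requested: int) -> float:
    """Fraction of the request that is actually consumed."""
    return used / requested


cpu_m, mem_mi = parse_top(SAMPLE)["go-demo-2-db-xxxxx"]
print(f"CPU: {utilization(cpu_m, 300):.1%} of the 300m request")
print(f"Memory: {utilization(mem_mi, 100):.1%} of the 100Mi request")
```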

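Practice: Allocating resources

Case 1: Fewer resources than actually used

go-demo-2-insuf-mem.yml

The memory limit is set to 20Mi and the request to 10Mi. We already know from the Metrics Server data that MongoDB needs around 35Mi, so this time the memory allowance is well below actual usage.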

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: 20Mi
            cpu: 0.5
          requests:
            memory: 10Mi
            cpu: 0.3
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
        resources:
          limits:
            memory: 100Mi
            cpu: 200m
          requests:
            memory: 50Mi
            cpu: 100m
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

Create the resources

kubectl apply \
    -f go-demo-2-insuf-mem.yml \
    --record

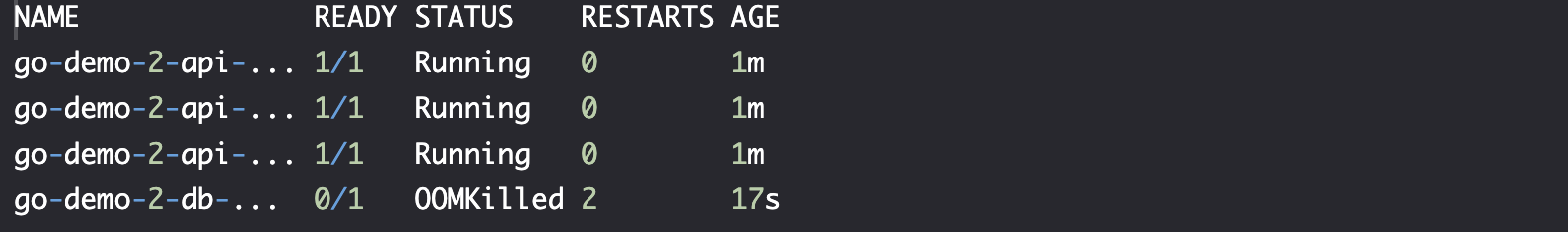

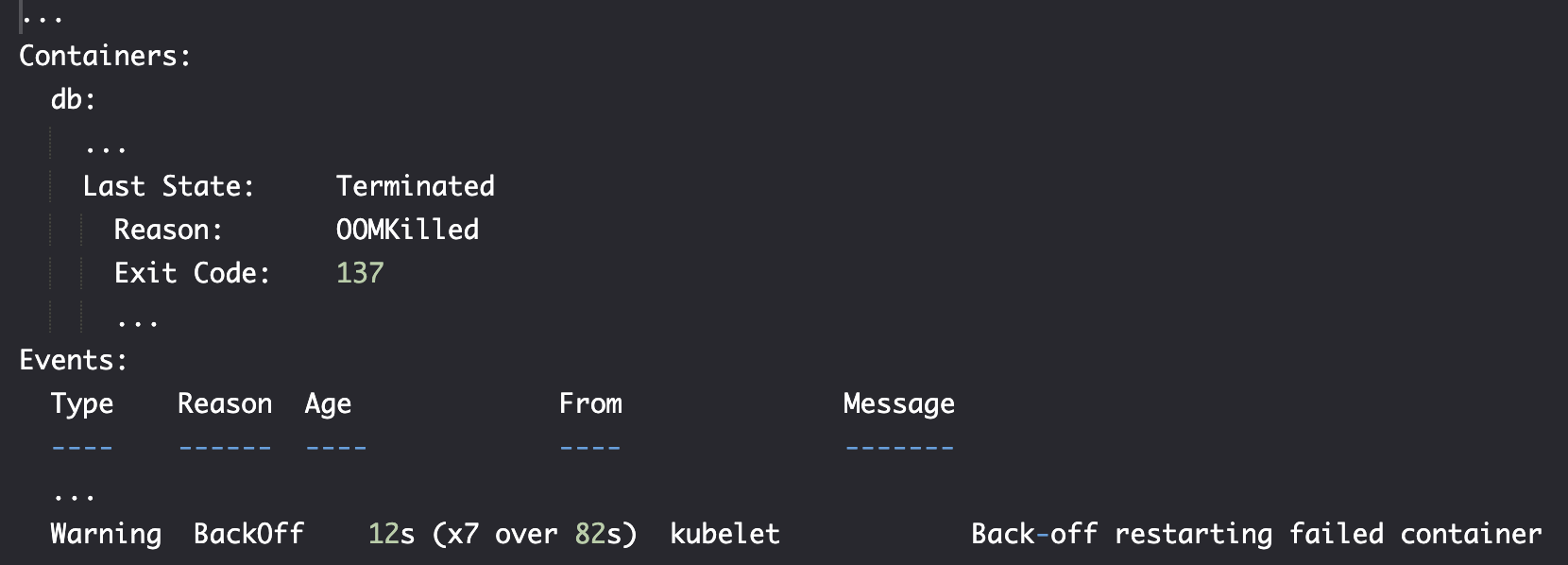

kubectl get pods

OOMKilled: the container was terminated because it ran out of memory. The DB Pod keeps restarting, and every attempt ends with OOMKilled again.

kubectl describe pod go-demo-2-db

Case 2: Requesting more resources than any node has

go-demo-2-insuf-node.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: 12Gi
            cpu: 0.5
          requests:
            memory: 12Gi
            cpu: 0.3
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
        resources:
          limits:
            memory: 100Mi
            cpu: 200m
          requests:
            memory: 50Mi
            cpu: 100m
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

Create the resources

kubectl apply \
    -f go-demo-2-insuf-node.yml \
    --record

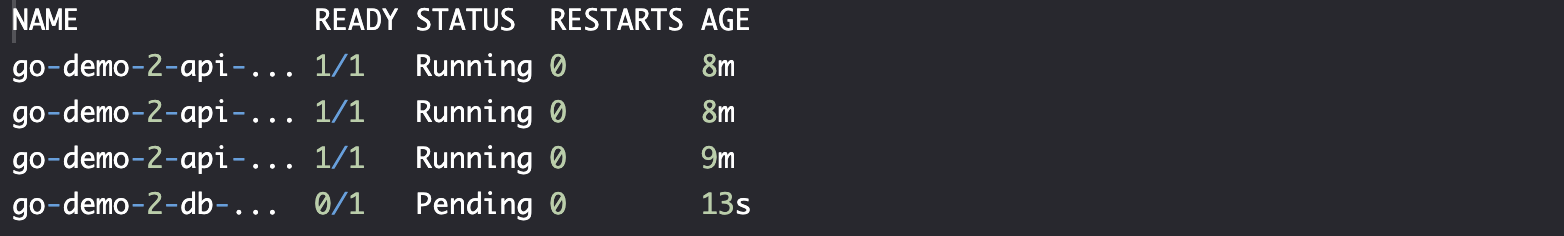

kubectl get pods

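During scheduling, Kubernetes sums the requests of a Pod's containers and looks for a node with enough free memory and CPU. If the requests cannot be satisfied, the Pod is placed in the Pending state until resources are freed on one of the nodes or a new server is added to the cluster.

Neither will happen in our case: the DB requests 12Gi of memory, which is more than our node has. The Pod created by the go-demo-2-db Deployment will therefore stay Pending forever, unless we change the memory request again.

Go back to the initial resource definition.

kubectl apply \
    -f go-demo-2-random.yml \
    --record

kubectl rollout status \
    deployment go-demo-2-db

kubectl rollout status \
    deployment go-demo-2-api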

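Case 3: Adjusting resources to actual usage

Container usage

kubectl top pods

As expected, the API containers use even fewer resources than MongoDB. Their memory usage is between 2Mi and 6Mi. Their CPU usage is so low that Metrics Server rounds it to 0m.

These metrics were collected while the containers were idle; production will show real resource usage. Stress testing before going to production helps us find suitable limits and requests, but keep in mind that only production provides the real metrics.

Adjust the resources

The requests are only slightly higher than current usage. We set each memory limit to twice the request so that the application has enough room for occasional (and short-lived) extra memory consumption. The CPU limits are much higher than the requests, mainly because setting a limit below one tenth of a CPU (0.1) is rarely a good idea.

The key is that requests stay close to the observed usage and limits are higher, so that the application has a buffer for temporary spikes in resource usage.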
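As a rough sanity check of the rule of thumb above, the following Python sketch reviews a memory request and limit against the observed usage. The function `review_memory_sizing` and its messages are invented for this illustration; all values are in Mi and come from the measurements and manifests in this article.

```python
def review_memory_sizing(observed_mi: int, request_mi: int, limit_mi: int) -> list:
    """Return a list of findings; an empty list means the sizing looks sane."""
    findings = []
    if request_mi < observed_mi:
        findings.append("request is below observed usage")
    if limit_mi < observed_mi:
        # The container will be OOMKilled as soon as it reaches normal usage.
        findings.append("limit is below observed usage")
    if limit_mi < request_mi:
        findings.append("limit is below request")
    return findings


# DB in go-demo-2.yml: ~35Mi observed, 50Mi requested, 100Mi limit.
print(review_memory_sizing(35, 50, 100))
# DB in go-demo-2-insuf-mem.yml: 10Mi requested, 20Mi limit.
print(review_memory_sizing(35, 10, 20))
```

The second call flags exactly the situation from case 1, where the DB kept being OOMKilled.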

go-demo-2.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: 100Mi
            cpu: 0.1
          requests:
            memory: 50Mi
            cpu: 0.01
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
        resources:
          limits:
            memory: 20Mi
            cpu: 0.1
          requests:
            memory: 10Mi
            cpu: 0.01
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

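Definition: Quality of Service (QoS) contracts

Definition and purpose

When there is not enough memory or CPU for all the containers, Kubernetes decides which containers to terminate based on their Quality of Service (QoS) priority.

Classes

- Guaranteed

- Burstable

- BestEffort

Guaranteed QoS

Guaranteed QoS is assigned only to Pods in which every container has CPU requests and limits as well as memory requests and limits.

- Memory and CPU limits must be set.

- Memory and CPU requests must be equal to the limits, or they can be left empty, in which case they default to the limits.

Pods with Guaranteed QoS have the highest priority. They are never terminated unless they exceed their limits or become unhealthy, and they are the last to go when resources run short. As long as such a Pod stays within its limits, Kubernetes will always choose to terminate Pods with other QoS classes first when resource usage exceeds capacity.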

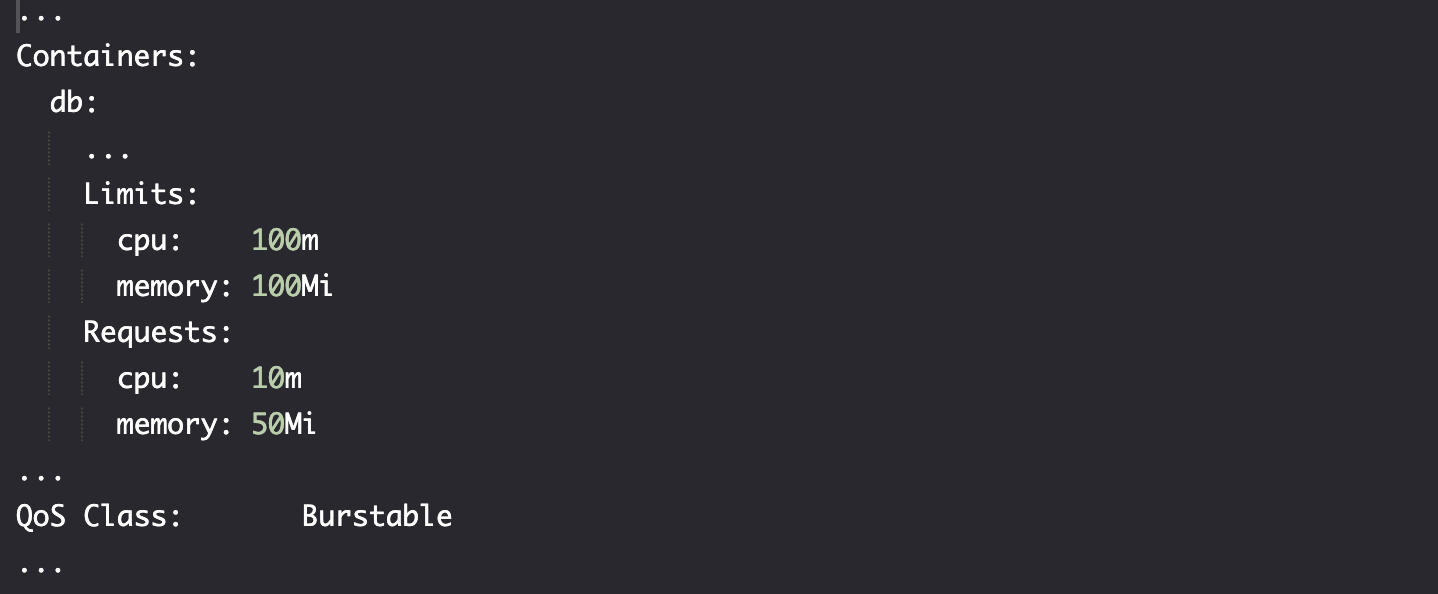

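Burstable QoS

Burstable QoS is assigned to Pods that do not meet the criteria for Guaranteed QoS but have at least one container with a memory or CPU request defined.

Pods with Burstable QoS have medium priority. If the system is under pressure and needs more available memory, and there are no Pods with BestEffort QoS left, containers of Burstable Pods are more likely to be terminated than those of Guaranteed Pods.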

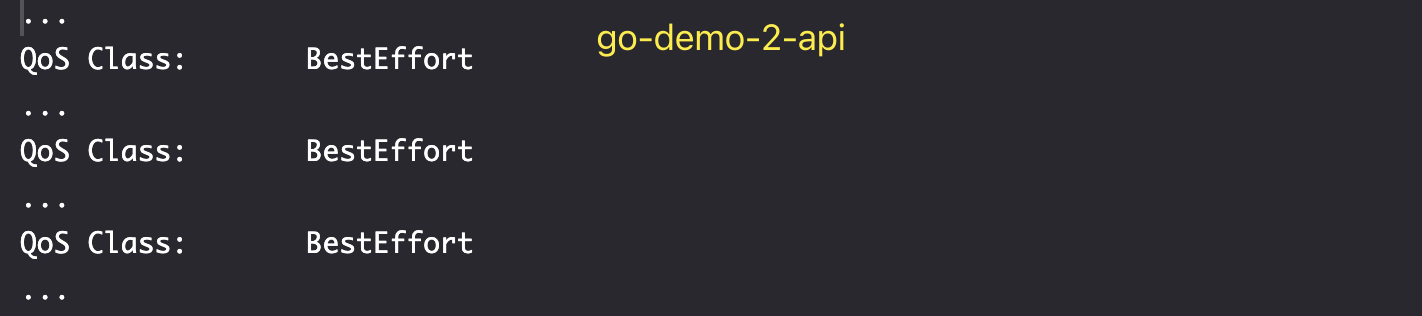

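BestEffort QoS

These are Pods made up of containers that do not define any resources. Containers in BestEffort Pods can use whatever available memory they need.

They have the lowest priority: when more resources are needed, Kubernetes terminates the containers of BestEffort Pods first.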
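The three definitions above boil down to a small decision procedure. The Python sketch below is an illustration of those rules, not the code Kubernetes itself runs; `qos_class` is a made-up helper, and it assumes every quantity has already been normalized to plain integers (millicores and Mi) so that equal amounts compare equal.

```python
def qos_class(containers: list) -> str:
    """Classify a Pod from its containers' resources.

    Each container is a dict such as
    {"requests": {"cpu": 100, "memory": 50}, "limits": {"cpu": 100, "memory": 50}}.
    """
    anything_set = False
    guaranteed = True
    for container in containers:
        requests = container.get("requests", {})
        limits = container.get("limits", {})
        if requests or limits:
            anything_set = True
        for resource in ("cpu", "memory"):
            limit = limits.get(resource)
            # An omitted request defaults to the limit.
            request = requests.get(resource, limit)
            if limit is None or request != limit:
                guaranteed = False
    if not anything_set:
        return "BestEffort"
    return "Guaranteed" if guaranteed else "Burstable"


# Requests equal to limits for both resources.
print(qos_class([{"limits": {"cpu": 100, "memory": 50},
                  "requests": {"cpu": 100, "memory": 50}}]))
# Requests lower than limits.
print(qos_class([{"limits": {"cpu": 100, "memory": 100},
                  "requests": {"cpu": 10, "memory": 50}}]))
# No resources defined at all.
print(qos_class([{}]))
```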

Practice: QoS in action

kubectl describe pod go-demo-2-db

Change the resource allocation

go-demo-2-qos.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: "50Mi"
            cpu: 0.1
          requests:
            memory: "50Mi"
            cpu: 0.1
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api # without any limits and requests here
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

Apply the change

kubectl apply \
    -f go-demo-2-qos.yml \
    --record

kubectl rollout status \
    deployment go-demo-2-db

Verify

kubectl describe pod go-demo-2-db

kubectl describe pod go-demo-2-api

Practice: Resource defaults and limits in a namespace

Create a test namespace

kubectl create ns test

Define the resource limits

limit-range.yml

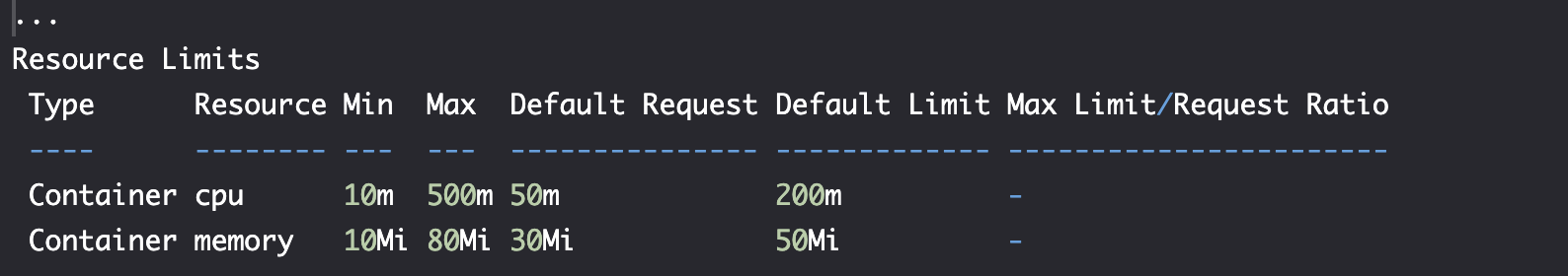

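- The default (for limits) and defaultRequest (for requests) entries are applied to containers that do not specify resources. If a container has no memory or CPU limit, it is assigned the values set in the LimitRange.

- When a container does define resources, they are evaluated against the LimitRange thresholds specified as max and min. If a container does not meet the criteria, the Pod hosting it is not created.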

apiVersion: v1
kind: LimitRange
metadata:
  name: limit-range
spec:
  limits:
  - default: # for limits
      memory: 50Mi
      cpu: 0.2
    defaultRequest: # for requests
      memory: 30Mi
      cpu: 0.05
    max:
      memory: 80Mi
      cpu: 0.5
    min:
      memory: 10Mi
      cpu: 0.01
    type: Container
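The effect of this LimitRange can be sketched in Python: fill in defaults for containers that omit resources, then check requests against min and limits against max. This is a simplified model of the admission check under the stated values, not the actual Kubernetes implementation; `admit` and the violation messages are hypothetical, and quantities are normalized to Mi and millicores.

```python
# Values copied from limit-range.yml, normalized to Mi and millicores.
LIMIT_RANGE = {
    "default": {"memory": 50, "cpu": 200},
    "defaultRequest": {"memory": 30, "cpu": 50},
    "max": {"memory": 80, "cpu": 500},
    "min": {"memory": 10, "cpu": 10},
}


def admit(container: dict) -> dict:
    """Apply defaults, then collect min/max violations for one container."""
    given_limits = container.get("limits", {})
    given_requests = container.get("requests", {})
    limits, requests, violations = {}, {}, []
    for resource in ("memory", "cpu"):
        limits[resource] = given_limits.get(resource, LIMIT_RANGE["default"][resource])
        if resource in given_requests:
            requests[resource] = given_requests[resource]
        elif resource in given_limits:
            # A limit without a request: the request follows the limit.
            requests[resource] = given_limits[resource]
        else:
            requests[resource] = LIMIT_RANGE["defaultRequest"][resource]
        if requests[resource] < LIMIT_RANGE["min"][resource]:
            violations.append(f"{resource} request below min")
        if limits[resource] > LIMIT_RANGE["max"][resource]:
            violations.append(f"{resource} limit above max")
    return {"limits": limits, "requests": requests, "violations": violations}


# A container with no resources receives the defaults.
print(admit({}))
# A container with a 100Mi memory limit exceeds max (80Mi).
print(admit({"limits": {"memory": 100, "cpu": 100},
             "requests": {"memory": 50, "cpu": 10}})["violations"])
```

A non-empty `violations` list corresponds to the case where the Pod hosting the container is not created.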

Create the LimitRange

kubectl --namespace test create \
    -f limit-range.yml \
    --save-config --record

Inspect it

kubectl describe namespace test

Test a new deployment whose containers have no resources defined

go-demo-2-no-res.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

kubectl --namespace test create \
    -f go-demo-2-no-res.yml \
    --save-config --record

kubectl --namespace test \
    rollout status \
    deployment go-demo-2-api

kubectl --namespace test describe \
    pod go-demo-2-db

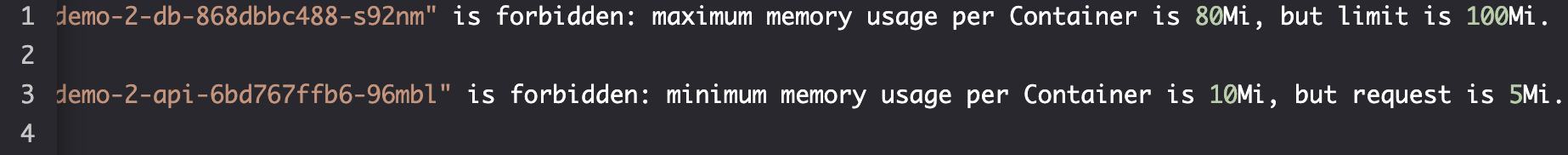

Scenario: resources that do not fit the LimitRange

go-demo-2.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: 100Mi
            cpu: 0.1
          requests:
            memory: 50Mi
            cpu: 0.01
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
        resources:
          limits:
            memory: 20Mi
            cpu: 0.1
          requests:
            memory: 10Mi
            cpu: 0.01
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

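Create the resources

kubectl --namespace test apply \
    -f go-demo-2.yml \
    --record

kubectl --namespace test \
    get events \
    --watch

The db Pod cannot be created: its memory limit of 100Mi is above the LimitRange max of 80Mi.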

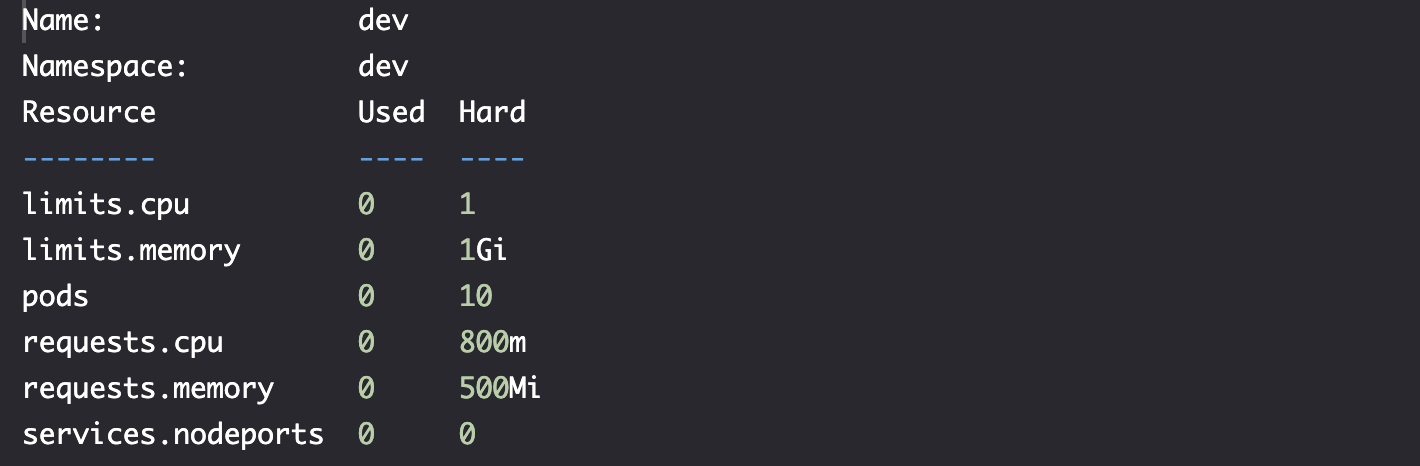

Practice: Defining a namespace quota with ResourceQuota

dev.yml

apiVersion: v1
kind: Namespace
metadata:
  name: dev
---
apiVersion: v1
kind: ResourceQuota
metadata:
  name: dev
  namespace: dev
spec:
  hard:
    requests.cpu: 0.8
    requests.memory: 500Mi
    limits.cpu: 1
    limits.memory: 1Gi
    pods: 10
    services.nodeports: "0" # forbid NodePort Services

Violation 1: number of Pods

Create the namespace and its quota

kubectl create ns dev

kubectl create \
    -f dev.yml \
    --record --save-config

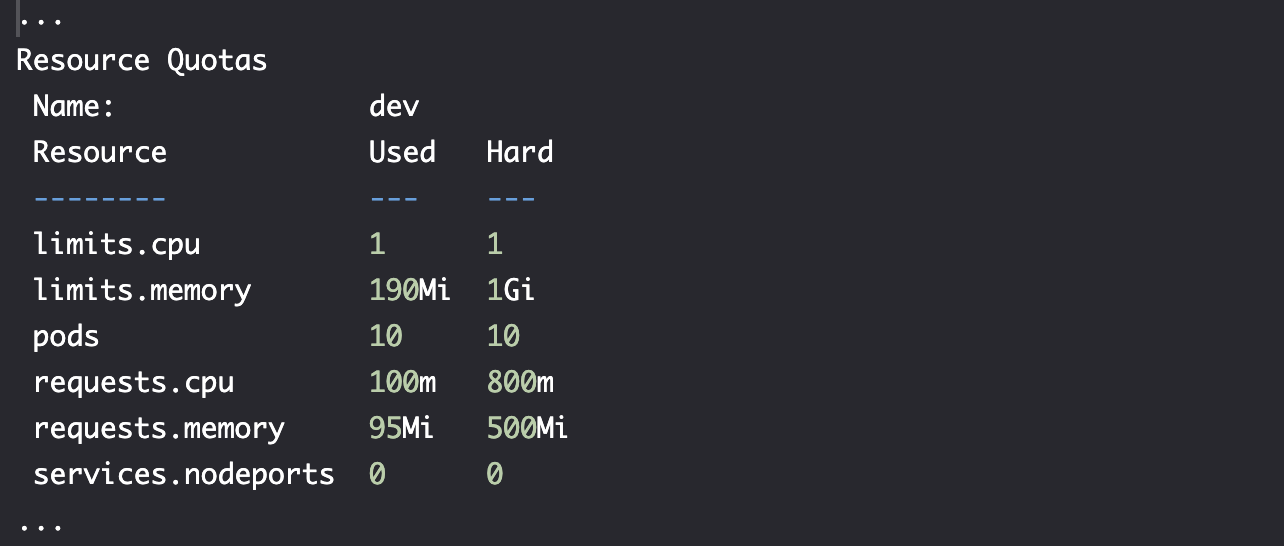

kubectl --namespace dev describe \
    quota dev

Create the resources

go-demo-2.yml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: go-demo-2
  annotations:
    kubernetes.io/ingress.class: "nginx"
    ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
spec:
  rules:
  - host: go-demo-2.com
    http:
      paths:
      - path: /demo
        pathType: ImplementationSpecific
        backend:
          service:
            name: go-demo-2-api
            port:
              number: 8080
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  selector:
    matchLabels:
      type: db
      service: go-demo-2
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        type: db
        service: go-demo-2
        vendor: MongoLabs
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: 100Mi
            cpu: 0.1
          requests:
            memory: 50Mi
            cpu: 0.01
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-db
spec:
  ports:
  - port: 27017
  selector:
    type: db
    service: go-demo-2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  selector:
    matchLabels:
      type: api
      service: go-demo-2
  template:
    metadata:
      labels:
        type: api
        service: go-demo-2
        language: go
    spec:
      containers:
      - name: api
        image: vfarcic/go-demo-2
        env:
        - name: DB
          value: go-demo-2-db
        readinessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
          periodSeconds: 1
        livenessProbe:
          httpGet:
            path: /demo/hello
            port: 8080
        resources:
          limits:
            memory: 20Mi
            cpu: 0.1
          requests:
            memory: 10Mi
            cpu: 0.01
---
apiVersion: v1
kind: Service
metadata:
  name: go-demo-2-api
spec:
  ports:
  - port: 8080
  selector:
    type: api
    service: go-demo-2

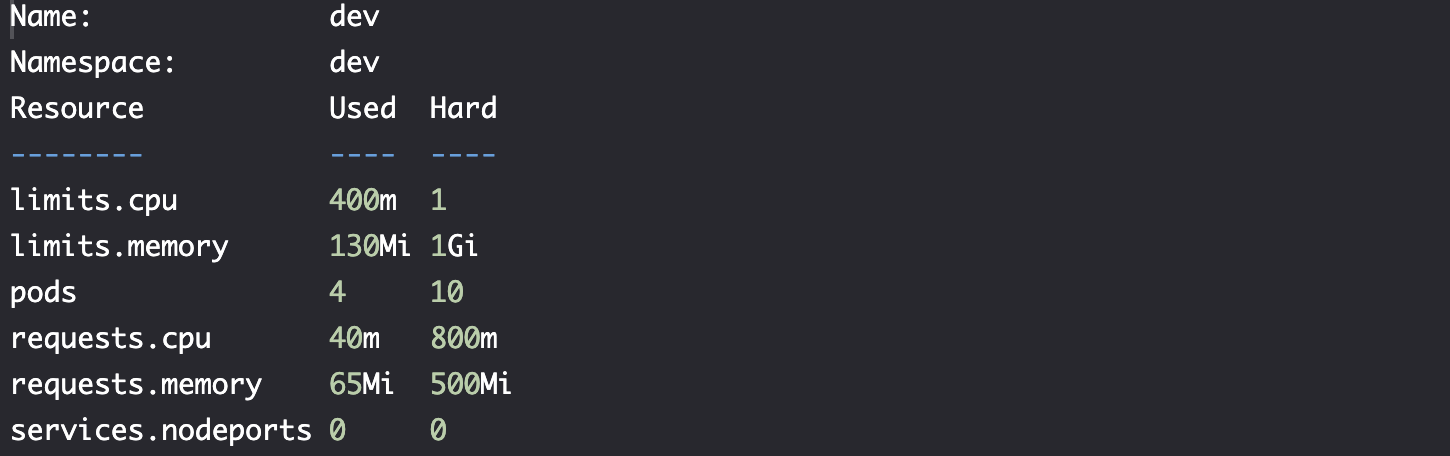

kubectl --namespace dev create \
    -f go-demo-2.yml \
    --save-config --record

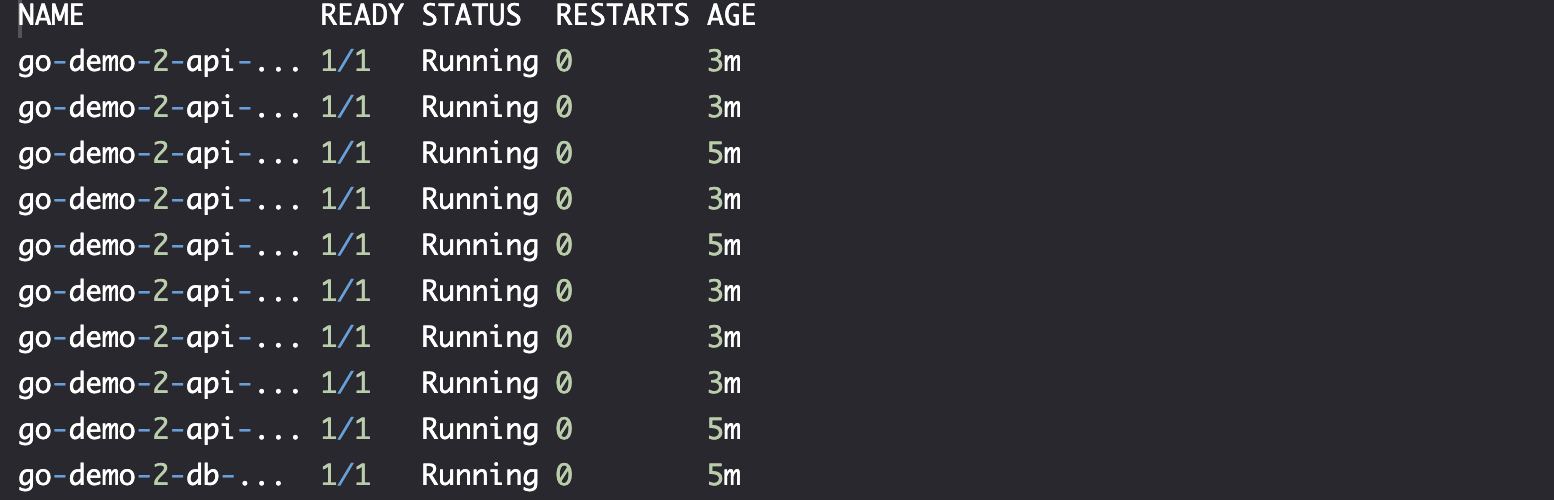

kubectl --namespace dev \
    rollout status \
    deployment go-demo-2-api

kubectl --namespace dev describe \
    quota dev

Exceeding the Pod count

go-demo-2-scaled.yml

...
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 15
...

Apply the change

kubectl --namespace dev apply \
    -f go-demo-2-scaled.yml \
    --record

kubectl --namespace dev get events

Analyze the error

kubectl describe namespace dev

The Pod count has reached its limit: the quota allows 10 Pods, while the scaled definition asks for 15 API replicas plus the DB Pod.

kubectl get pods --namespace dev

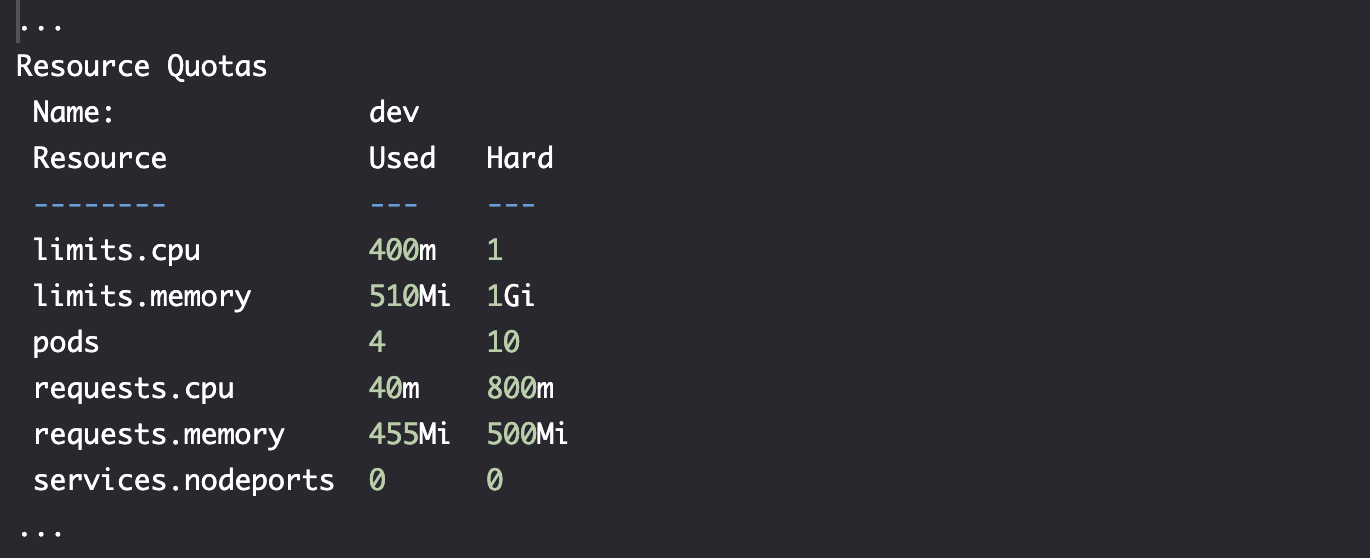

Revert to the previous definition

kubectl --namespace dev apply \
    -f go-demo-2.yml \
    --record

kubectl --namespace dev \
    rollout status \
    deployment go-demo-2-api

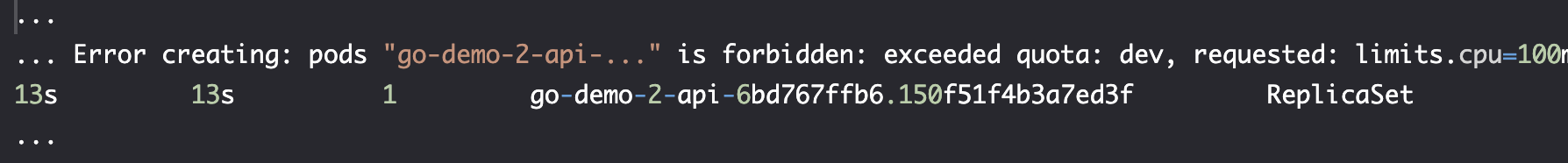

Violation 2: memory quota

go-demo-2-mem.yml

...
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-db
spec:
  ...
  template:
    ...
    spec:
      containers:
      - name: db
        image: mongo:3.3
        resources:
          limits:
            memory: "100Mi"
            cpu: 0.1
          requests:
            memory: "50Mi"
            cpu: 0.01
...
apiVersion: apps/v1
kind: Deployment
metadata:
  name: go-demo-2-api
spec:
  replicas: 3
  ...
  template:
    ...
    spec:
      containers:
      - name: api
        ...
        resources:
          limits:
            memory: "200Mi"
            cpu: 0.1
          requests:
            memory: "200Mi"
            cpu: 0.01
...

Apply the change

kubectl --namespace dev apply \
    -f go-demo-2-mem.yml \
    --record

kubectl --namespace dev get events \
    | grep mem

Analyze the error

kubectl describe namespace dev
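The arithmetic behind the first two violations is easy to reproduce. The Python sketch below compares the totals a set of Deployments would need with the `pods` and `requests.memory` values from the dev quota. It is a simplification: Kubernetes evaluates the quota each time a Pod is created, so some replicas are admitted before the quota is hit. The helper names and the workload dictionaries are made up for this illustration; memory is in Mi.

```python
# Hard limits taken from the ResourceQuota in the dev namespace (memory in Mi).
HARD = {"requests.memory": 500, "pods": 10}


def quota_usage(workloads: list) -> dict:
    """Sum the Pod count and memory requests the workloads would need."""
    return {
        "pods": sum(w["replicas"] for w in workloads),
        "requests.memory": sum(w["replicas"] * w["request_mem_mi"] for w in workloads),
    }


def violations(workloads: list) -> list:
    """Names of the quota entries that the desired state would exceed."""
    used = quota_usage(workloads)
    return [name for name in HARD if used[name] > HARD[name]]


db = {"replicas": 1, "request_mem_mi": 50}

baseline = [db, {"replicas": 3, "request_mem_mi": 10}]    # go-demo-2.yml
scaled = [db, {"replicas": 15, "request_mem_mi": 10}]     # go-demo-2-scaled.yml
more_mem = [db, {"replicas": 3, "request_mem_mi": 200}]   # go-demo-2-mem.yml

for name, workloads in [("baseline", baseline), ("scaled", scaled), ("mem", more_mem)]:
    print(name, quota_usage(workloads), violations(workloads))
```

With three API replicas requesting 200Mi each plus 50Mi for the DB, the total of 650Mi is above the 500Mi allowed by `requests.memory`.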

Revert to the previous definition

kubectl --namespace dev apply \
    -f go-demo-2.yml \
    --record

kubectl --namespace dev \
    rollout status \
    deployment go-demo-2-api

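Violation 3: Services NodePort quota

Use the expose command to create a Service of type NodePort. Because the quota sets services.nodeports to "0", the request should be rejected.

kubectl expose deployment go-demo-2-api \
    --namespace dev \
    --name go-demo-2-api \
    --port 8080 \
    --type NodePort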

Definition: Types of quotas

1. Compute resource quotas

| Resource Name | Description |
|---|---|
| cpu | Across all Pods in a non-terminal state, the sum of CPU requests cannot exceed this value. |
| limits.cpu | Across all Pods in a non-terminal state, the sum of CPU limits cannot exceed this value. |
| limits.memory | Across all Pods in a non-terminal state, the sum of memory limits cannot exceed this value. |
| memory | Across all Pods in a non-terminal state, the sum of memory requests cannot exceed this value. |
| requests.cpu | Across all Pods in a non-terminal state, the sum of CPU requests cannot exceed this value. |
| requests.memory | Across all Pods in a non-terminal state, the sum of memory requests cannot exceed this value. |

2. Storage resource quotas

| Resource Name | Description |
|---|---|
| requests.storage | Across all persistent volume claims, the sum of storage requests cannot exceed this value. |
| persistentvolumeclaims | The total number of persistent volume claims that can exist in the namespace. |
| [PREFIX]/requests.storage | Across all persistent volume claims associated with the storage-class-name, the sum of storage requests cannot exceed this value. |
| [PREFIX]/persistentvolumeclaims | Across all persistent volume claims associated with the storage-class-name, the total number of persistent volume claims that can exist in the namespace. |
| requests.ephemeral-storage | Across all Pods in the namespace, the sum of local ephemeral storage requests cannot exceed this value. |
| limits.ephemeral-storage | Across all Pods in the namespace, the sum of local ephemeral storage limits cannot exceed this value. |

3. Object count quotas

| Resource Name | Description |
|---|---|
| configmaps | The total number of ConfigMaps that can exist in the namespace |
| persistentvolumeclaims | The total number of persistent volume claims that can exist in the namespace |
| pods | The total number of Pods in a non-terminal state that can exist in the namespace. A Pod is in a terminal state if the status phase (Failed, Succeeded) is true. |
| replicationcontrollers | The total number of replication controllers that can exist in the namespace |
| resourcequotas | The total number of resource quotas that can exist in the namespace |
| services | The total number of services that can exist in the namespace |
| services.loadbalancers | The total number of Services of type load balancer that can exist in the namespace |
| services.nodeports | The total number of Services of type node port that can exist in the namespace |
| secrets | The total number of Secrets that can exist in the namespace |